Emmanuel Biau

University of Liverpool

e.biau@liverpool.ac.uk

I am a Research Fellow currently funded by a Tenure-Track Fellowship at the University of Liverpool.

About me

In 2009, I graduated with a Master's degree in Biology, focusing on Neuroscience, from Pierre and Marie Curie University (Paris). In 2015, I obtained a PhD in Biomedicine at Pompeu Fabra University (Barcelona) under the supervision of Salvador Soto-Faraco. During my PhD, I investigated the role of speakers' hand gestures in speech perception and audio-visual integration. In 2016, I moved to Maastricht University to take up a postdoctoral position as a Marie Skłodowska-Curie fellow under the supervision of Sonja Kotz. My project addressed how listeners naturally map rhythmic visual and auditory prosodies during speech perception. In 2018, I joined Simon Hanslmayr's team at the University of Birmingham (UK) after being awarded a Sir Henry Wellcome postdoctoral fellowship to study the role of audio-visual synchrony on theta rhythms in speech perception and memory. I am now establishing myself as an independent researcher at the University of Liverpool.

Line of research

Speech memories are like the internal movies of our lives, allowing us to replay conversations we had with friends or to anticipate future responses from colleagues. Unlike with movies, however, it is unclear how the brain merges information from the senses and forms new memories during speech encoding. This is the question that I am committed to answering in my line of research.

Main research interest

I aim to understand how the tight synchrony between visual and auditory information on certain rhythms in speech predicts multisensory perception and the formation of new memories, with a main focus on the role of brain oscillations. My research is organised around the key steps taking place in the brain during audio-visual speech perception:

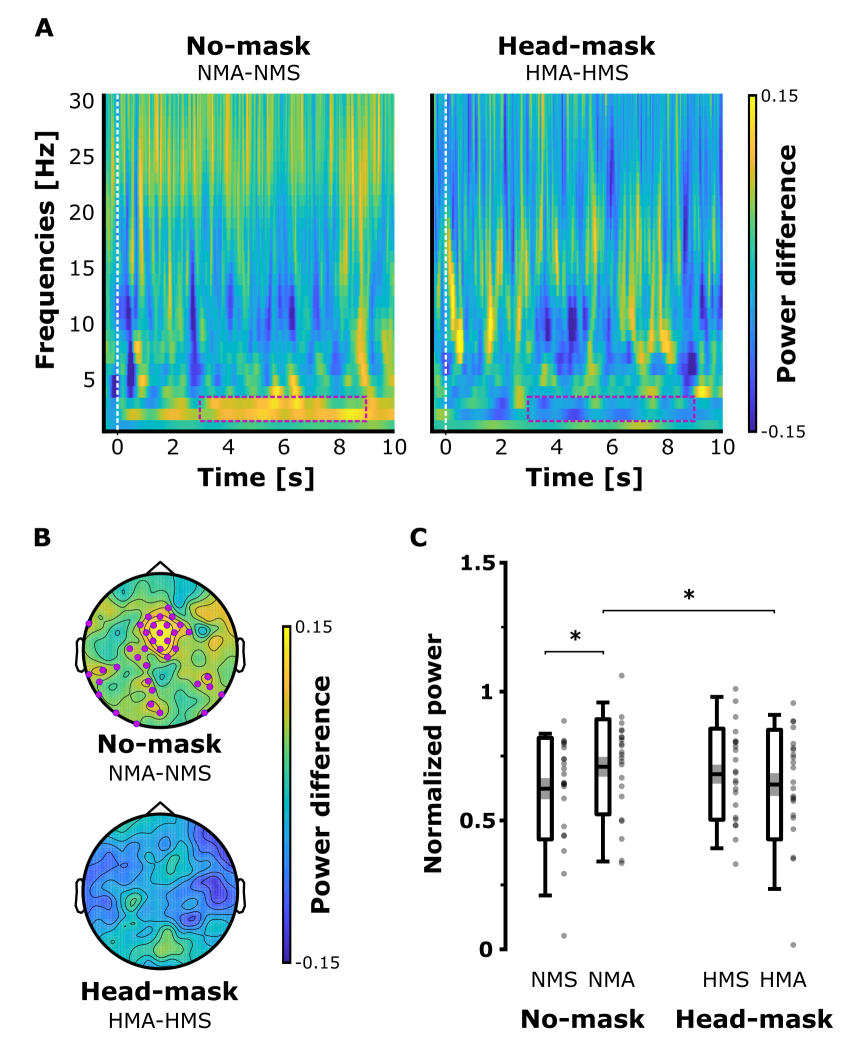

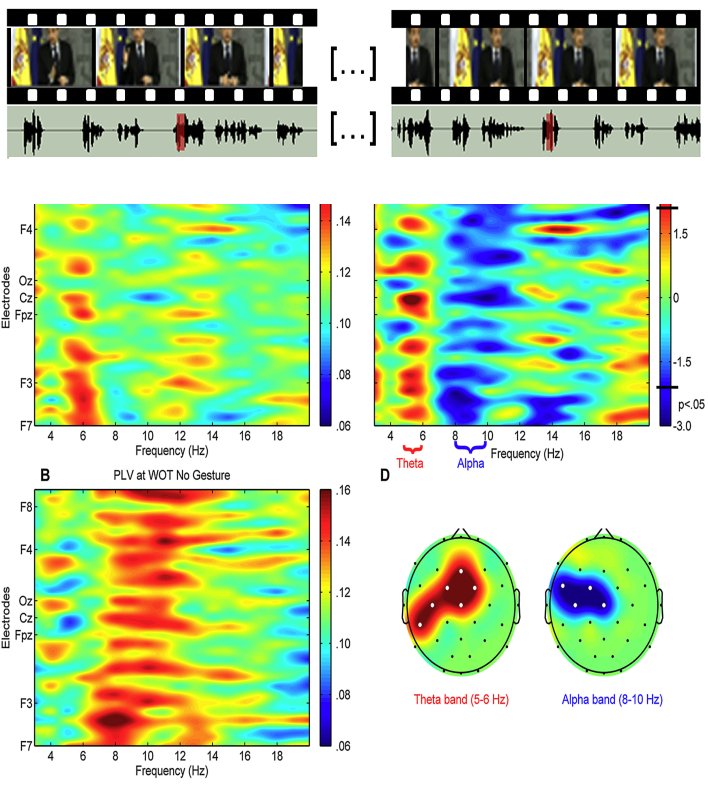

- Separately processing two simultaneous streams of information conveyed in the visual and auditory modalities;

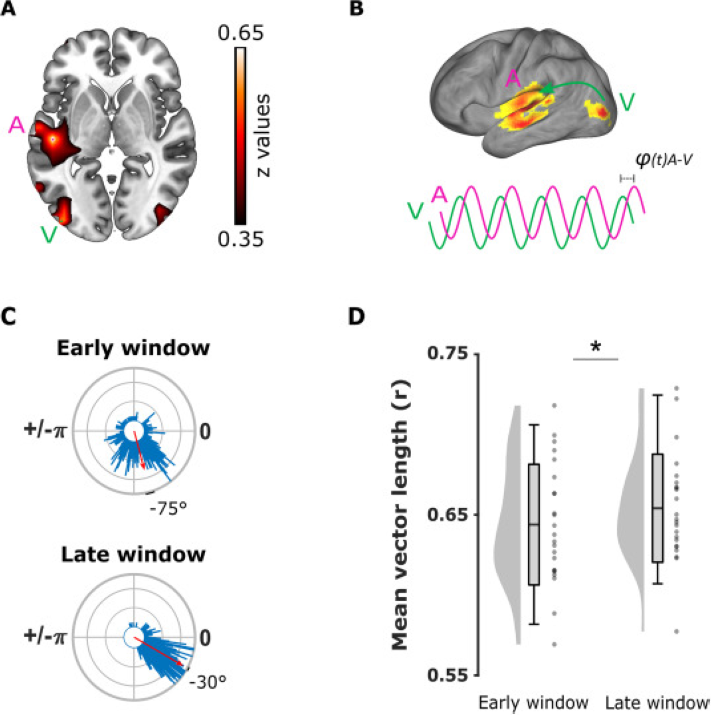

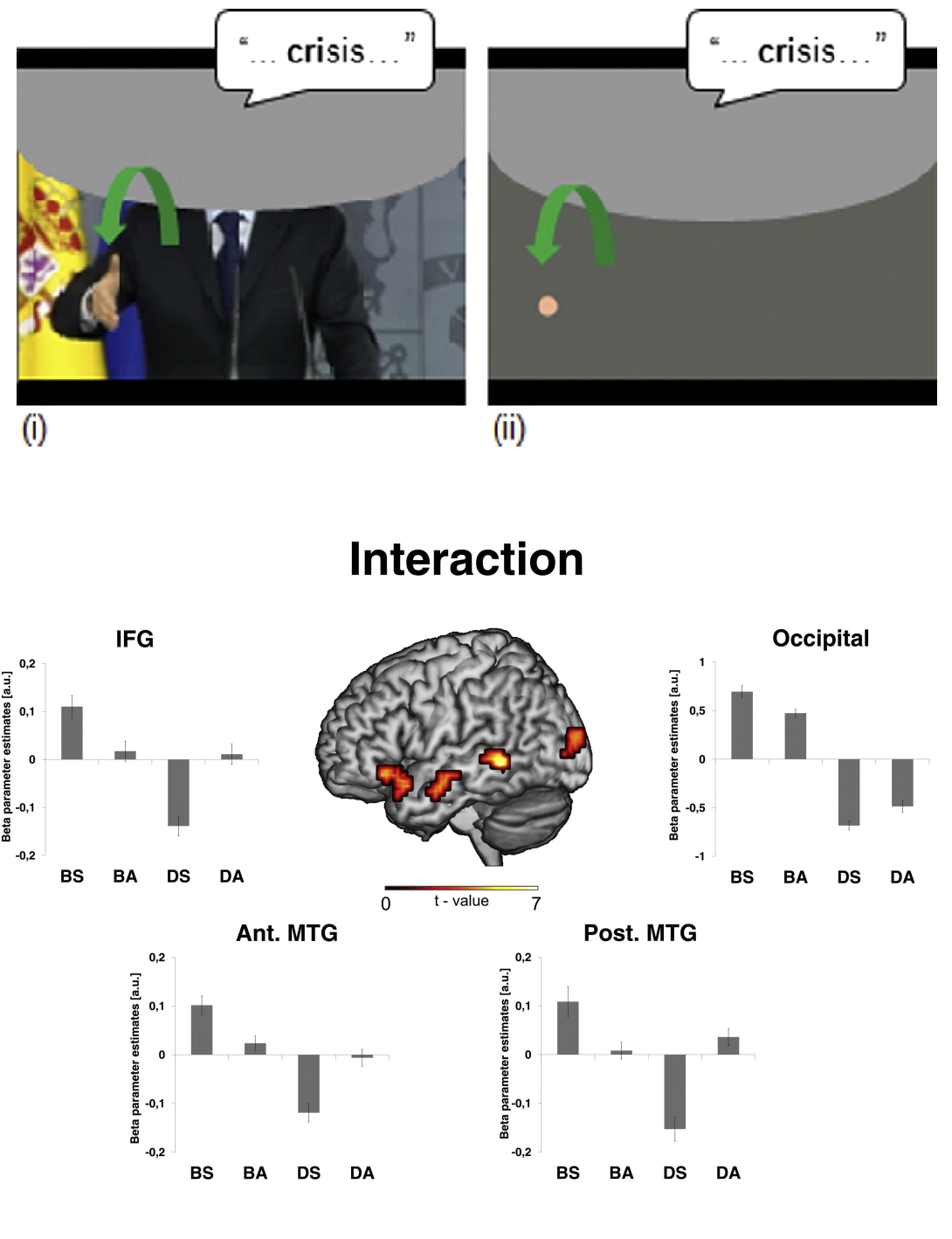

- Integrating information to produce a unified representation of speech in the brain;

- Forming and storing a new trace of speech in memory;

- Recollecting speech memories at any time (think about your favorite movie dialogues).

Approach

I combine naturalistic stimuli and behavioural tasks with a broad range of techniques to establish the neural bases of multisensory speech perception and subsequent memory:

- Electro- and Magnetoencephalography (EEG/MEG);

- Neuroimaging (fMRI);

- Intracranial EEG (iEEG) recordings in patients with epilepsy;

- Computational models that integrate my empirical findings to predict neural responses during multisensory speech perception.